Last month at GDC 2019, NVIDIA revealed that they would finally be enabling public support for DirectX Raytracing (DXR) on non-RTX cards. Such support is long baked into the DXR specification itself, which is designed to encourage ray tracing hardware development while also allowing DXR to be implemented via traditional compute shaders. Adding DXR support to cards without hardware RT acceleration is therefore a small but important step in the deployment of the API and its underlying technology. At the time of the announcement, NVIDIA said that this driver would be released in April, and this morning NVIDIA is releasing it.

As we covered in last month’s initial announcement of the driver, this has been something of a long time coming for NVIDIA. The initial development of DXR and the first DXR demos (including the Star Wars Reflections demo) were all handled on cards without hardware RT acceleration, in particular NVIDIA's Volta-based video cards. Microsoft provided its own fallback layer for a time, but for the public release it was going to be up to GPU manufacturers to provide support, including their own fallback layers. So we have been expecting the release of this driver in some form for quite some time.

Of course, the elephant in the room in enabling DXR on cards without RT hardware is what it will do for performance – or perhaps the lack thereof. High-quality RT features already bog down NVIDIA’s best RTX cards that do have the hardware for the task, never mind their non-RTX cards, which are all either older (GeForce 10 series) or lower-tier (GeForce 16 series) than the flagship GeForce 20 series cards. This actually has NVIDIA a bit worried – they don’t want someone with a GTX 1060 turning on Ultra mode in Battlefield V and wondering why it’s taking seconds per frame – so the company has been on a campaign both at GDC and ahead of the driver’s launch to better explain the different types of common RT effects, and why some RT effects are more expensive than others.

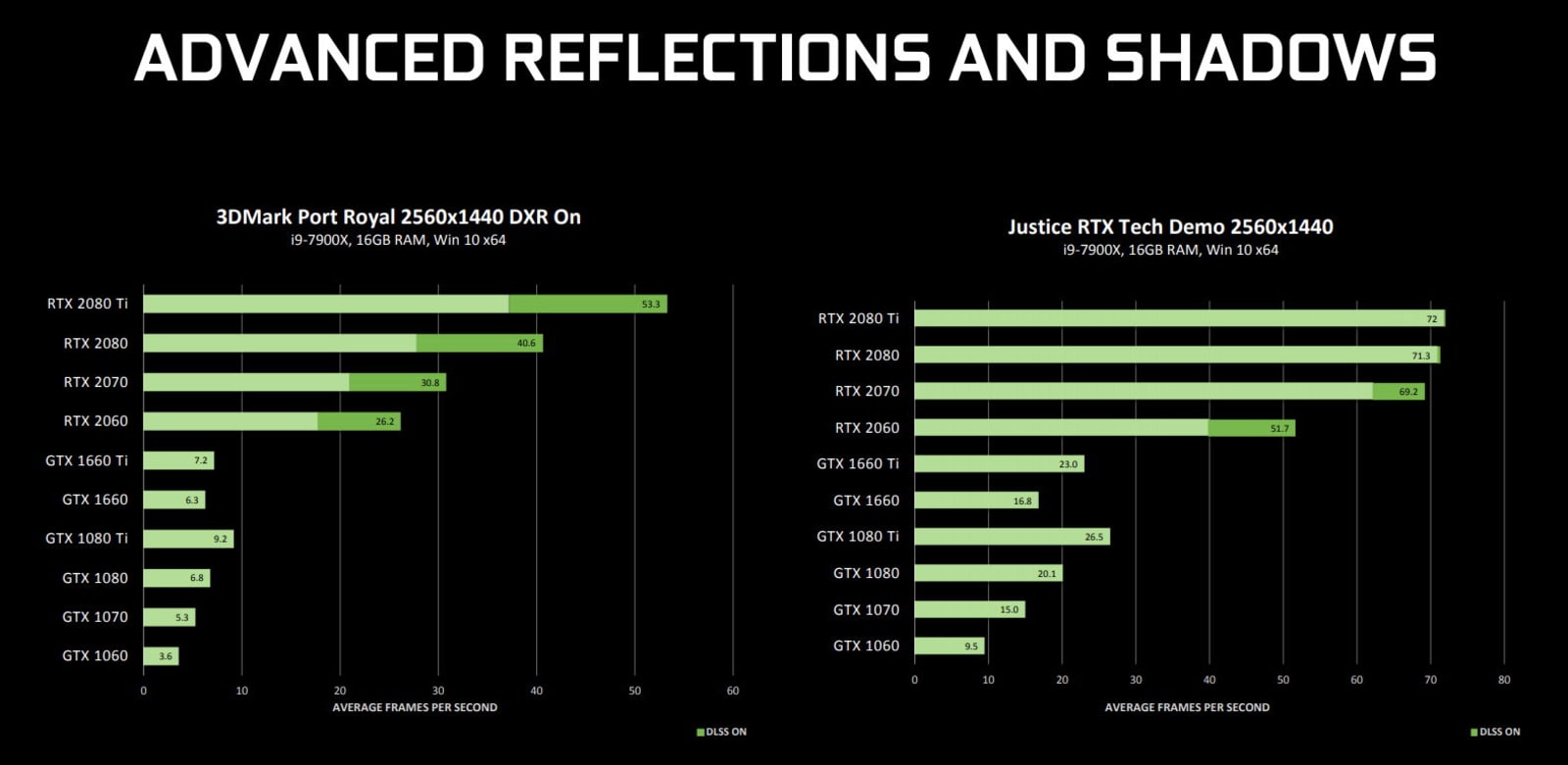

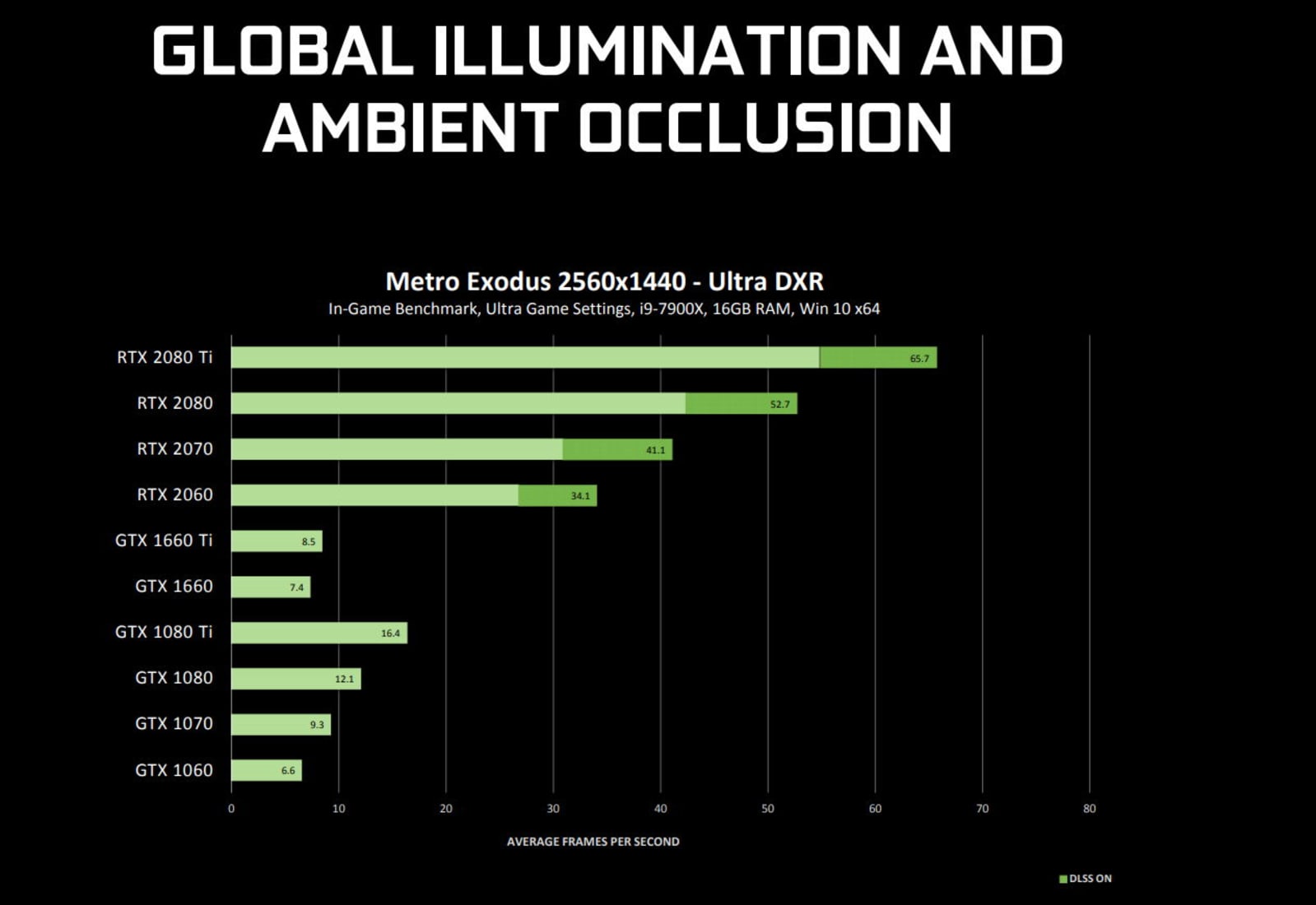

The long and short of it is that simple reflections and shadows can be had without terrible performance drops on cards that lack RT hardware; however, the more rays an effect requires, the worse the performance hit gets (or, perhaps, the better an RTX card looks by comparison). So particularly impressive effects like RT global illumination and accurate ambient occlusion are out, while cheap reflections (which are always a crowd pleaser) are more attainable.

This all varies with the game and the settings used, of course. NVIDIA’s been working with partners to improve their DXR effect implementations (an effort that’s actually been fairly successful over the last half-year, going by some of the earliest games), but it’s still a matter of tradeoffs depending on the game and video card used. Much to my own surprise, however, NVIDIA says that they aren’t expecting game developers to release patches to account for DXR support on cards without RT hardware. Such patches aren't strictly required, since DXR abstracts away the hardware difference, but it is up to developers to account for the performance difference. In this case, it sounds like game devs are satisfied that they’ve provided enough DXR quality settings that users will be able to dial things down for slower cards. But as always, the proof is in the results, which everyone will be able to see first-hand soon enough.

Ahead of this driver release, NVIDIA has put out some of their own performance numbers. And while they’re harmless enough, they are all done at 1440p with everything cranked up to Ultra quality, so they present something of a worst-case scenario for cards without RT hardware. GTX card owners will want to use much lower RT quality settings than what NVIDIA uses here.

As a reminder, while NVIDIA’s DXR fallback layer is meant to target Pascal and Turing cards that lack RT hardware, not all of these cards are supported. Specifically, the low-end Pascal family isn’t included, so support starts with the GeForce GTX 1060 6GB and also covers NVIDIA’s (thus far) two GTX 16 series cards, the GTX 1660 and GTX 1660 Ti.

Overall, the new driver is being released this morning at the same time as this news post goes up, at 9am ET. And while NVIDIA hasn’t confirmed the driver build number or given the press an advance look at the driver, this driver should be the first public driver in NVIDIA’s new Release 430(?) driver branch. In which case there’s going to be a lot more going on with this driver than just adding DXR support for more cards; NVIDIA’s support schedule calls for Mobile Kepler to be moved to legacy status this month, so I’m expecting that this will be the first driver to omit support for those parts. New driver branches are some of the most interesting driver releases from NVIDIA, since these are normally the break points where they introduce new features under the hood, so I’m eager to see what they have been up to since R415/R418 was first released back in October.

DXR Tech Demo Releases: Reflections, Justice, and Atomic Heart

Along with today’s driver release, NVIDIA and its partners are also releasing a trio of previously announced/demonstrated DXR tech demos. These include the Star Wars Reflections demo, Justice, and Atomic Heart.

These demos have been screened extensively by NVIDIA, Epic, and others, so admittedly there’s nothing new to see that you wouldn’t have already seen in their respective YouTube videos. However, as an aficionado of proper public binary releases of tech demos (something that’s become increasingly rare these days; Tim, I need Troll!), it’s great to see these demos finally released to the public. After all, seeing is believing, and seeing something rendered in real time is a lot more interesting than seeing a recorded video of it.

Anyhow, all three demos are going to be released through NVIDIA today. What I’m being told is that Reflections and Justice will be hosted directly by NVIDIA, whereas Atomic Heart will be hosted off-site, for anyone keeping score. For NVIDIA, of course, it’s in their own best interests to put their best foot forward with RT, and to have something a bit more curated and forward-looking than the current crop of games; though I don’t imagine it hurts either that these demos should bring any GTX card to its knees rather quickly.

https://www.anandtech.com/show/14203/nvidia-releases-dxr-driver-for-gtx-cards

2019-04-11 13:01:52Z
